This work explored generating realistic galaxy images by training on a large corpus of galaxy photos from the Galaxy Zoo dataset. At the time, this was the largest generated set of galaxy photos.

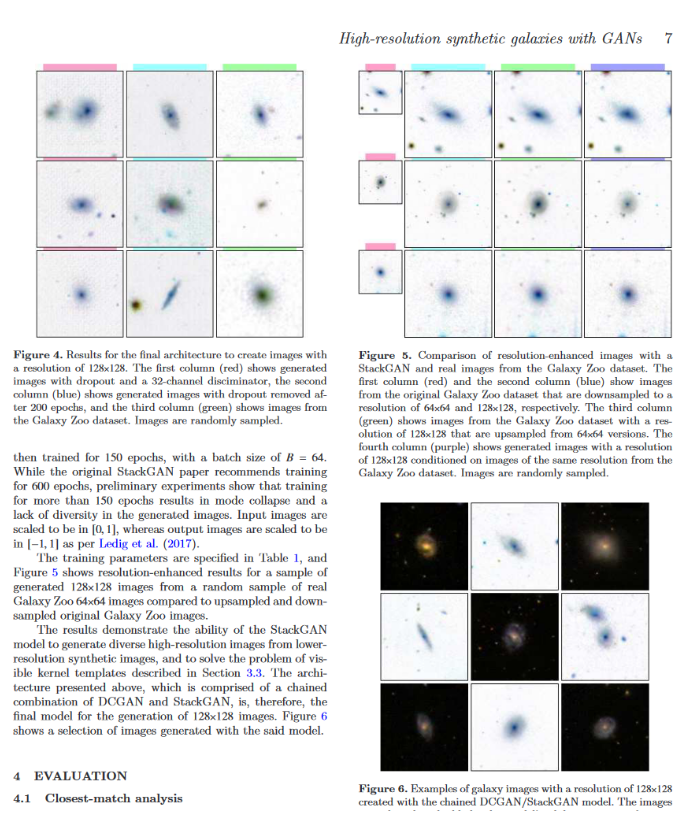

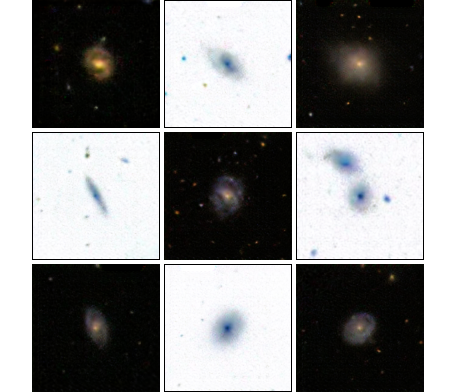

The generated images were not conditioned on any statistical properties of the galaxies. We used an architecture similar to StackGAN: a first generator produced low-resolution images, and a second generator scaled up the resolution. The novelty of our approach was to also include a pixel-based upscaling loss on the ground-truth dataset for the second GAN model in the chain. The results were unique galaxies that were indistinguishable from real galaxies:

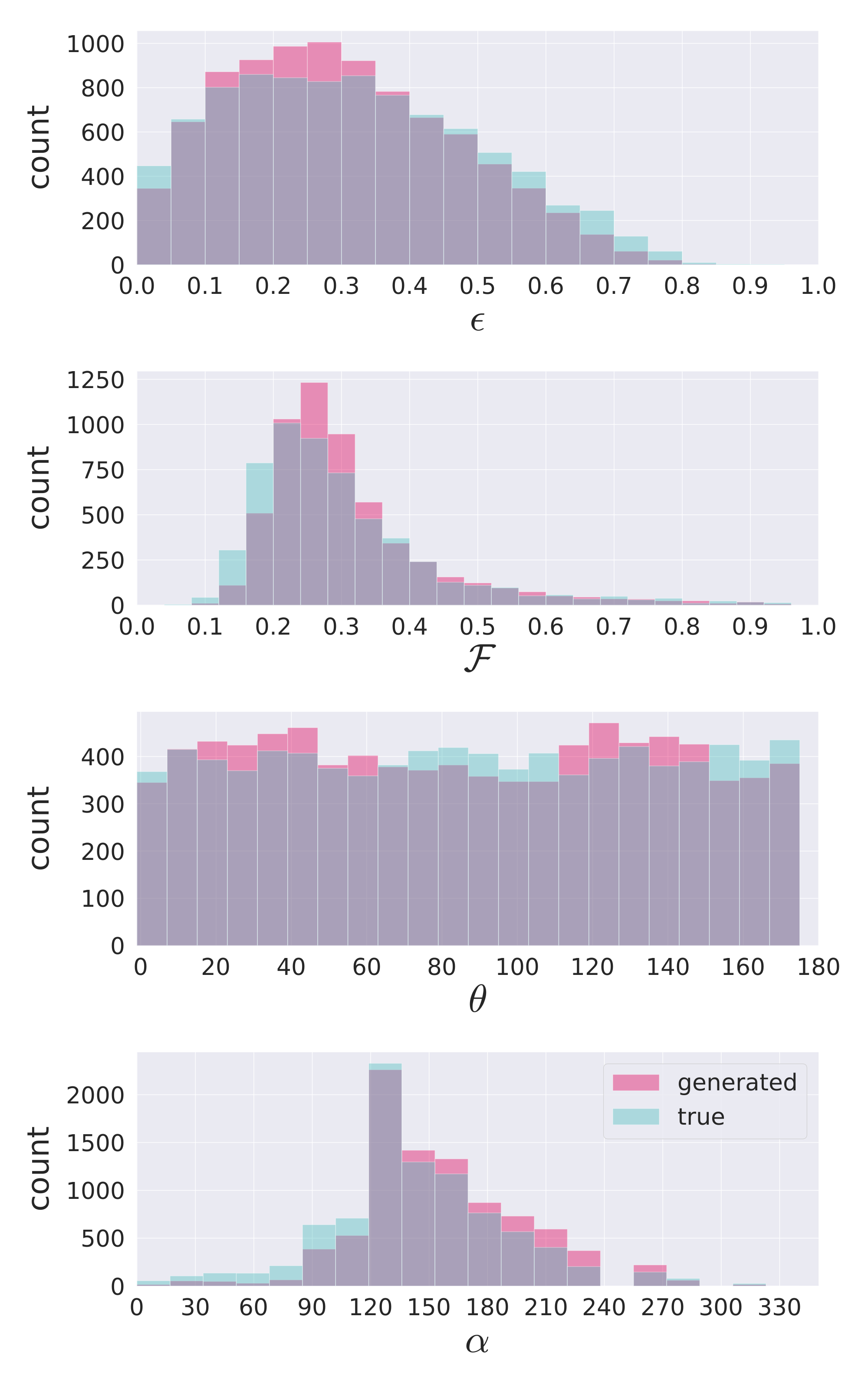
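
To make the pixel-based upscaling loss more concrete, here is a minimal sketch in plain Python. It is not the code from the paper: the function names, the average-pooling downsampler, the L1 pixel distance, the non-saturating adversarial term, and the `pixel_weight` parameter are all assumptions chosen for illustration. The idea it shows is that a real high-resolution image is downsampled, passed through the second-stage upscaler, and compared pixel by pixel against the original, and that this term is added to the usual adversarial loss.

```python
import math


def downsample(image, factor):
    """Average-pool a 2D image (list of rows) by an integer factor."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(image[y + dy][x + dx]
                for dy in range(factor)
                for dx in range(factor)) / factor ** 2
            for x in range(0, w - factor + 1, factor)
        ]
        for y in range(0, h - factor + 1, factor)
    ]


def nearest_upscale(image, factor):
    """Hypothetical stand-in for the second generator: nearest-neighbour upscaling."""
    return [
        [value for value in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


def pixel_loss(predicted, target):
    """Mean absolute per-pixel difference between two equally sized images."""
    total = sum(
        abs(p - t)
        for pred_row, target_row in zip(predicted, target)
        for p, t in zip(pred_row, target_row)
    )
    return total / (len(target) * len(target[0]))


def stage2_generator_loss(disc_score_fake, upscaled_real, real_high_res,
                          pixel_weight=1.0):
    """Adversarial term plus a weighted pixel term (assumed form of the loss).

    disc_score_fake: discriminator probability in (0, 1] that a generated
    high-resolution image is real.
    """
    adversarial = -math.log(disc_score_fake)
    return adversarial + pixel_weight * pixel_loss(upscaled_real, real_high_res)


# Toy 4x4 "ground truth" image made of uniform 2x2 blocks.
real = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]
low_res = downsample(real, 2)
reconstructed = nearest_upscale(low_res, 2)
loss = stage2_generator_loss(0.5, reconstructed, real)
```

In this toy case the upscaler reconstructs the image exactly, so the pixel term vanishes and only the adversarial term remains. In a real training loop the upscaler would be a neural network and the pixel term would pull its output toward the ground-truth image.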

We then tested whether the distributions of four measured features of the generated galaxies matched those of the real dataset. This was an indirect way to quantify the quality of the generated data for use in data augmentation. The four plots below show these properties (top to bottom): ellipticity, relative flux, angle distribution, and semi-major axis size.
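
One simple way to put a number on how closely two such distributions match is the two-sample Kolmogorov-Smirnov statistic, the largest gap between the two empirical cumulative distribution functions. The sketch below is an illustration only: the summary above does not say which statistical comparison was used, and the function name and the sample values are made up for the example.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    max_gap = 0.0
    # The empirical CDFs only change at observed values, so checking
    # every observed value is enough to find the largest gap.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap


# Hypothetical ellipticity measurements for real and generated galaxies.
real_ellipticity = [0.10, 0.20, 0.30, 0.40]
generated_ellipticity = [0.30, 0.40, 0.50, 0.60]
gap = ks_statistic(real_ellipticity, generated_ellipticity)
```

A value near 0 means the two samples have nearly identical empirical distributions, while a value of 1 means they do not overlap at all. The same function could be applied separately to each of the four measured properties.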

A repository is available online where you can download the trained model and generate your own galaxies. Give it a try!

@article{10.1093/mnras/stz602,
  author = {Fussell, Levi and Moews, Ben},
  title = "{Forging new worlds: high-resolution synthetic galaxies with chained generative adversarial networks}",
  journal = {Monthly Notices of the Royal Astronomical Society},
  volume = {485},
  number = {3},
  pages = {3203-3214},
  year = {2019},
  month = {03},
  issn = {0035-8711},
  doi = {10.1093/mnras/stz602},
  url = {https://doi.org/10.1093/mnras/stz602},
  eprint = {https://academic.oup.com/mnras/article-pdf/485/3/3203/28221657/stz602.pdf},
}